Managing user accounts can be a bit confusing when working with MySQL. The two main commands in that context are CREATE USER and GRANT. CREATE USER adds a new user, while GRANT assigns permissions.

Prerequisites: Docker Compose

For testing access to a MySQL instance, we can use Docker to run that instance and two different client containers. The docker-compose snippet below orchestrates those three containers and puts them on a shared network.

version: '3'

services:
  mysql-db:
    image: mysql:8
    container_name: mysql-8-server
    restart: unless-stopped
    command: --default-authentication-plugin=mysql_native_password
    environment:
      MYSQL_DATABASE: 'world'
      MYSQL_ALLOW_EMPTY_PASSWORD: 'yes'
    ports:
      - '3308:3306'
    expose:
      - '3306'
    volumes:
      - my-data-volume:/var/lib/mysql
      - ./demo_data/world.sql:/docker-entrypoint-initdb.d/world.sql:ro
    networks:
      mysql-test-net:
        ipv4_address: 172.28.1.2

  mysql-client-1:
    image: alpine-mysql:latest
    container_name: client-1
    restart: unless-stopped
    command: tail -f /dev/null
    networks:
      mysql-test-net:
        ipv4_address: 172.28.1.3

  mysql-client-2:
    image: alpine-mysql:latest
    container_name: client-2
    restart: unless-stopped
    command: tail -f /dev/null
    networks:
      mysql-test-net:
        ipv4_address: 172.28.1.4

volumes:
  my-data-volume:

networks:
  mysql-test-net:
    ipam:
      driver: default
      config:
        - subnet: 172.28.0.0/16

The docker-compose script is pretty self-explanatory. We use a MySQL 8 container that allows connecting as the root user with an empty password. We persist the data of the MySQL database in a separate data volume called my-data-volume, and we also load the example database called world, which we downloaded before.

In order to have a nice and simple image for the clients, we can use the Alpine image and pre-install the MySQL client inside it by using the following Dockerfile.

FROM alpine:3.7

RUN apk add --no-cache mysql-client

You need to build it once on your host with the command

docker build --tag alpine-mysql:latest .

After starting the setup with docker-compose up, we end up with three containers:

- mysql-8-server

- client-1

- client-2

MySQL User Accounts, Access and Permissions

In this blog post, I would like to highlight some issues that arise when you add accounts for users that can connect from different hosts. Our docker container accepts connections for root from any host. In the wild, this is of course bad practice and we are going to change that as soon as we have added a second account.

Connect to the MySQL server by starting the MySQL client inside the container client-1 and point it to the right host by providing the hostname of the database server. Docker resolves the container names for us nicely, because all three containers are inside the same network. To improve readability, we also change the prompt so that it is more obvious which client we are connecting from.

$ docker exec -it client-1 mysql -uroot -h mysql-8-server --prompt "Client 1 >"

Welcome to the MariaDB monitor. Commands end with ; or \g.

Your MySQL connection id is 9

Server version: 8.0.12 MySQL Community Server - GPL

Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

Client 1 >

The Root User

Let's make our example more secure by allowing root to connect only from the machine where the database instance is running. While still connected from client-1 as root, we can change the permissions. But first, let's have a look at which users exist.

Client 1 >SELECT User, Host, authentication_string FROM mysql.user\G

*************************** 1. row ***************************

User: root

Host: %

authentication_string:

*************************** 2. row ***************************

User: mysql.infoschema

Host: localhost

authentication_string: $A$005$THISISACOMBINATIONOFINVALIDSALTANDPASSWORDTHATMUSTNEVERBRBEUSED

*************************** 3. row ***************************

User: mysql.session

Host: localhost

authentication_string: $A$005$THISISACOMBINATIONOFINVALIDSALTANDPASSWORDTHATMUSTNEVERBRBEUSED

*************************** 4. row ***************************

User: mysql.sys

Host: localhost

authentication_string: $A$005$THISISACOMBINATIONOFINVALIDSALTANDPASSWORDTHATMUSTNEVERBRBEUSED

*************************** 5. row ***************************

User: root

Host: localhost

authentication_string:

5 rows in set (0.00 sec)

As we can see, there are five preinstalled accounts. The users mysql.infoschema, mysql.session and mysql.sys are internal accounts that cannot be used for client connections. Do not delete them though, they are needed for internal tasks. Then there are two root accounts: 'root'@'%' and 'root'@'localhost'. The first one is the problematic one, because with the wildcard '%', clients can connect from any remote computer. We definitely do not want that.

So let's drop it:

DROP USER 'root'@'%';

When you now run the SELECT query again, you will notice that it still works, even after you run FLUSH PRIVILEGES. The reason is that the server checks whether an account is allowed to connect only when a new connection is established; a session that is already open stays usable until it is closed. So let's exit the session and try to connect again.

$ docker exec -it client-1 mysql -uroot -h mysql-8-server --prompt "Client 1 >"

ERROR 1130 (HY000): Host '172.28.1.3' is not allowed to connect to this MySQL server

As expected, we cannot connect from a remote machine anymore. Let's log in from inside the MySQL container instead.

docker exec -it mysql-8-server mysql -uroot -h localhost --prompt "Server >"

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 11

Server version: 8.0.12 MySQL Community Server - GPL

Copyright (c) 2000, 2018, Oracle and/or its affiliates. All rights reserved.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

Server >

Note how we are now connecting to the mysql-8-server and using localhost as the hostname.
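To double-check which account the server actually matched for a session, a query along the following lines can help. This is a minimal sketch; the column aliases are made up for readability and are not part of the original setup.

```sql
-- USER() reports the user name and client host of the current connection.
-- CURRENT_USER() reports the account ('user'@'host') that the server
-- matched during authentication; this is the one whose privileges apply.
SELECT USER()         AS connected_as,
       CURRENT_USER() AS matched_account;
```

When the two values differ, the account that was matched is usually a less specific one than expected, for example an entry with a wildcard host.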

Localhost vs 127.0.0.1

In the example above we connected using localhost as the hostname. When we try the same with the IP address 127.0.0.1 of the loopback interface, which is often seen as synonymous, it does not work anymore.

docker exec -it mysql-8-server mysql -uroot -h 127.0.0.1 --prompt "Server >"

ERROR 1130 (HY000): Host '127.0.0.1' is not allowed to connect to this MySQL server

This is confusing. After installing ping inside the MySQL container (apt-get install inetutils-ping), we can verify that localhost resolves to 127.0.0.1.

But there is more to it. First of all, MySQL can resolve the IP address of a connecting client to a hostname, which requires a DNS lookup. As this can be pretty slow, name resolution is turned off in the official MySQL Docker image, so the server matches client connections by IP address only. You can verify it like this:

Server >show variables like 'skip_name_resolve';

+-------------------+-------+

| Variable_name     | Value |

+-------------------+-------+

| skip_name_resolve | ON    |

+-------------------+-------+

1 row in set (0.00 sec)

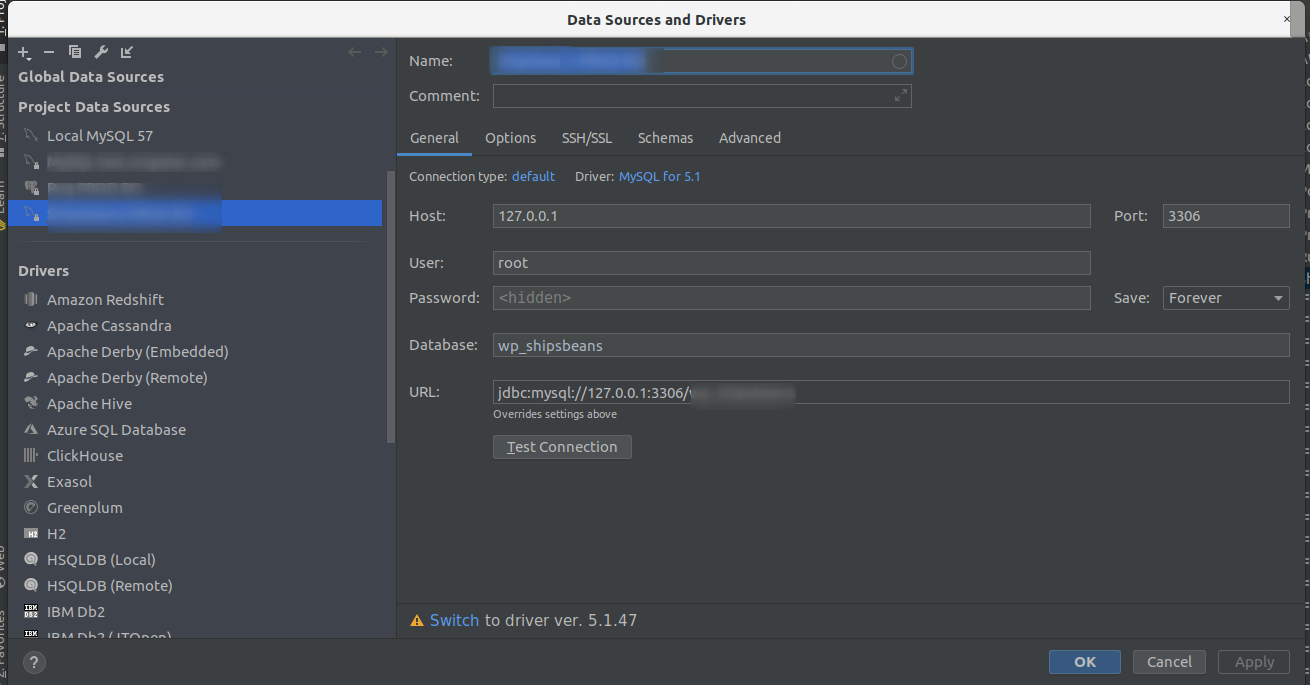

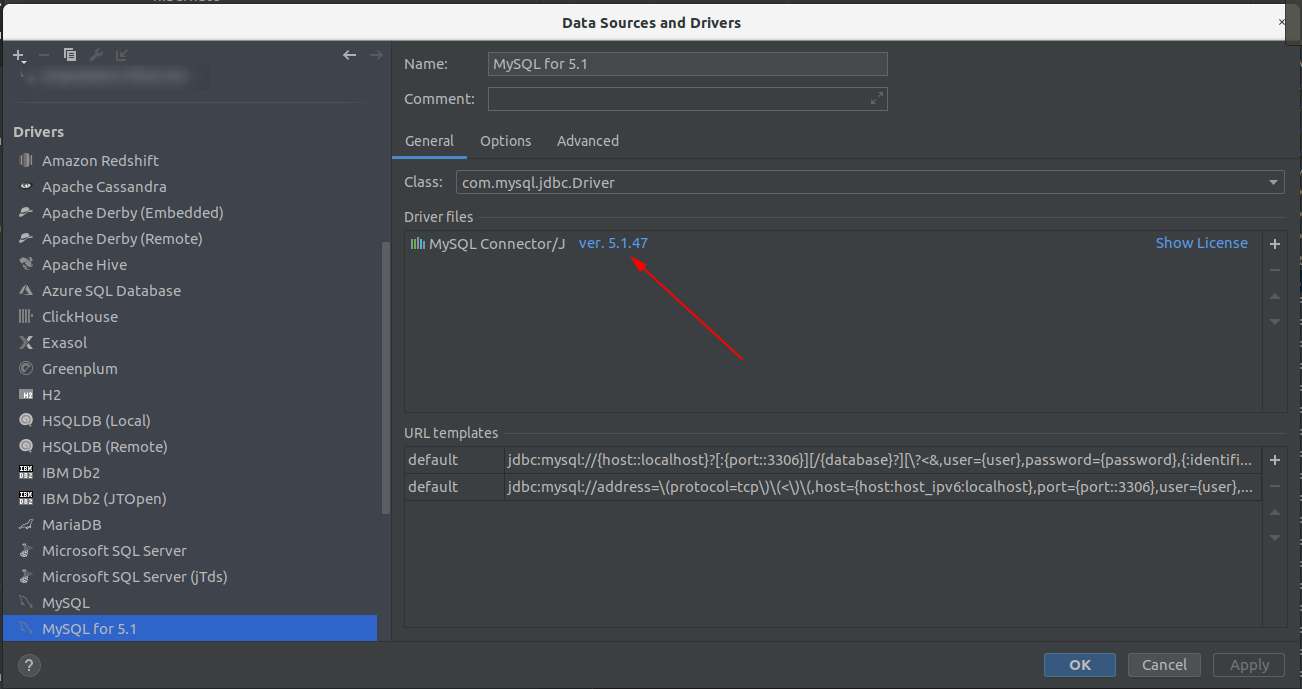

Furthermore, localhost is a special hostname for MySQL. You can connect to MySQL either through a TCP connection or through a socket file. The socket file only works on the machine the server is running on, and it is the faster method. This is why MySQL by default treats connections to localhost as socket connections. To connect via 127.0.0.1, the root user needs a second account entry with that host. Let's try to add it using GRANT.

Server >GRANT ALL PRIVILEGES ON *.* TO 'root'@'127.0.0.1';

ERROR 1410 (42000): You are not allowed to create a user with GRANT

Now that's weird. After all, we are root and should be allowed to do anything, yet we get the error message above. The reason is that no account for 'root'@'127.0.0.1' exists yet, and MySQL 8 no longer creates accounts implicitly through GRANT.

Server >CREATE USER 'root'@'127.0.0.1' IDENTIFIED BY 'super-secret';

Query OK, 0 rows affected (0.07 sec)

Server >GRANT ALL PRIVILEGES ON *.* to 'root'@'127.0.0.1';

Query OK, 0 rows affected (0.07 sec)

Server >GRANT GRANT OPTION ON *.* to 'root'@'127.0.0.1';

Query OK, 0 rows affected (0.08 sec)

You always need to create a user account first before setting permissions with GRANT.

While we are at it, we can also set a password for root. Note how we set the password for both root accounts. Accounts are always identified with 'username'@'hostname'.

Server >ALTER USER 'root'@'localhost' IDENTIFIED BY 'super-secret';

Server >ALTER USER 'root'@'127.0.0.1' IDENTIFIED BY 'super-secret';
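With both root accounts in place, it can be useful to see whether a given session came in through the socket file or over TCP. One way to check this, assuming the Performance Schema is enabled (which is the default in MySQL 8), is to look up the current connection in the performance_schema.threads table:

```sql
-- Look up the thread that belongs to the current connection.
-- CONNECTION_TYPE holds the transport of the session,
-- for example a socket file or TCP/IP.
SELECT t.PROCESSLIST_USER,
       t.PROCESSLIST_HOST,
       t.CONNECTION_TYPE
FROM performance_schema.threads AS t
WHERE t.PROCESSLIST_ID = CONNECTION_ID();
```

Running this once after connecting with -h localhost and once with -h 127.0.0.1 should make the difference between the two root accounts visible.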

Adding Users

Let’s log on with our new credentials again, create a new user called bob and add some permissions for the world database.

CREATE USER 'bob' IDENTIFIED BY 'secret';

This looks pretty harmless and adds an account for the user called bob. Without a host, however, MySQL simply uses the wildcard host and therefore allows connecting to the MySQL instance from any IP address. You can verify it with the following command, which I am going to use more often in the course of this post.

Server >SHOW GRANTS FOR 'bob';

+---------------------------------+

| Grants for bob@%                |

+---------------------------------+

| GRANT USAGE ON *.* TO `bob`@`%` |

+---------------------------------+

1 row in set (0.00 sec)

That's not what we had in mind. Let's delete bob's account again and create it with a proper host.

DROP USER 'bob';

CREATE USER 'bob'@'172.28.1.3' IDENTIFIED BY 'secret';

Note how we limited the account to allow connections only from Client 1 by providing its IP address. You can't connect from Client 2.

stefan@stefan-desktop:~/Docker-Projects/MySQL-Demo$ docker exec -it client-1 mysql -ubob -h mysql-8-server -psecret --prompt "Client 1 >"

Welcome to the MariaDB monitor. Commands end with ; or \g.

Your MySQL connection id is 14

Server version: 8.0.12 MySQL Community Server - GPL

Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

Client 1 >exit

Bye

stefan@stefan-desktop:~/Docker-Projects/MySQL-Demo$ docker exec -it client-2 mysql -ubob -h mysql-8-server -psecret --prompt "Client 2 >"

ERROR 1045 (28000): Access denied for user 'bob'@'172.28.1.4' (using password: YES)
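So far bob can log in, but the account only has the USAGE privilege, which means it cannot read anything in the world database yet. A minimal sketch of how permissions could be added follows; read-only access is an assumption chosen for illustration, and the statements would be run as root on the server.

```sql
-- Allow bob (connecting from Client 1 only) to read every table in world.
GRANT SELECT ON world.* TO 'bob'@'172.28.1.3';

-- Verify the result; the full 'user'@'host' is needed here because
-- the account was not created with the wildcard host.
SHOW GRANTS FOR 'bob'@'172.28.1.3';

-- To take the permission away again:
-- REVOKE SELECT ON world.* FROM 'bob'@'172.28.1.3';
```

Because the grant is tied to 'bob'@'172.28.1.3', it does not open up access from Client 2.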

These are the very basics of MySQL account management. In the future I plan to go into more detail.