The average computer user needs to memorize at least 17 passwords for private accounts. Power users handle several additional accounts for work as well, and memorizing good, complex passwords quickly becomes a burden, if not impossible. To overcome this memory problem, a variety of tools store passwords and their associated metadata in password stores. Typically, a password manager consists of a password file, which contains the passwords and the metadata in structured form, and an application that assists the user in decrypting and encrypting the passwords. A typical example is KeePass, an open source password management application. KeePass uses a master password to encrypt the password file. An additional key file can be used to increase security by requiring a second factor to open the password database. A large number of ports of this software exist, which makes it possible to open, edit and store passwords on virtually any platform.

As the passwords are stored in a single file, a versioning mechanism is required that tracks changes to the passwords on all devices and merges them in order to keep the devices synchronized. Online services that handle versioned password storage exist as well, but they require you to hand over sensitive information and to trust the provider to handle the passwords safely. Storing the encrypted password file in a cloud drive such as Dropbox, Google Drive or Microsoft Azure also partially solves the versioning issue, but the data still resides on foreign servers. The new Raspberry Pi Zero offers an alternative: it is a low-cost, low-power device that can be turned into a privately managed and reasonably secure versioned password store under your own control.

## What is needed?

- A Raspberry Pi (in fact, any Linux system will do; this example uses the new Pi Zero)

- Power supply

- microSD card

- USB hub

- Wi-Fi dongle

- USB keyboard

## Preparing the Raspberry Pi Zero

The Raspbian operating system can be installed easily by writing the image to the microSD card. As the Pi Zero does not come with an integrated network interface, a Wi-Fi dongle can be used to enable wireless networking. You can edit the configuration file directly on the SD card by opening it on a different PC with any editor and providing the SSID and the shared secret in advance.

```
# File: /etc/wpa_supplicant/wpa_supplicant.conf
ctrl_interface=DIR=/var/run/wpa_supplicant GROUP=netdev
update_config=1

network={
    ssid="MyWIFI"
    psk="SECRET"
}
```

Then put the card back into the Pi, attach the keyboard and the Wi-Fi dongle, and connect the Pi to a screen. Boot the device and log in with the standard credentials, which are the user name pi and the password raspberry.

```
sudo adduser stefan                       # add a new user
sudo apt-get install openssh-server git   # install the SSH server and git
passwd                                    # change the default password
sudo adduser stefan sudo                  # add the new user to the sudo group
```

In the next step, it is recommended to assign a static IP address to the Pi, as we need to configure port forwarding to a specific IP address on the router in a later step. Open the interfaces file and provide a static IP address as follows:

```
# File: /etc/network/interfaces
allow-hotplug wlan0
iface wlan0 inet static
    address 192.168.0.100
    netmask 255.255.255.0
    gateway 192.168.0.1
    wpa-conf /etc/wpa_supplicant/wpa_supplicant.conf
```

You can then remove the HDMI cable and also the keyboard, as now SSH is available via the static IP address we just defined above. The next step covers the installation of the [Git server][1] and the configuration of public key authentication.

```
sudo adduser git                  # add a new git user
su git                            # change into the git account
cd /home/git                      # change to the home directory
mkdir .ssh                        # create the directory for the keys
chmod 700 .ssh                    # secure permissions
touch .ssh/authorized_keys        # create the file for authorized keys
chmod 600 .ssh/authorized_keys    # secure permissions for this file
```
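With its default `StrictModes yes` setting, sshd may refuse public key logins when the `.ssh` directory or the `authorized_keys` file has overly permissive modes, so it is worth checking them once. The following is a minimal sketch, assuming GNU `stat` (as shipped with Raspbian); the function name `check_ssh_perms` is made up for this example.

```shell
#!/bin/sh
# check_ssh_perms DIR: verify that DIR has mode 700 and DIR/authorized_keys has mode 600.
# Hypothetical helper for illustration; requires GNU stat for the -c option.
check_ssh_perms() {
    dir=${1:-"$HOME/.ssh"}
    dir_mode=$(stat -c '%a' "$dir") || return 1
    file_mode=$(stat -c '%a' "$dir/authorized_keys") || return 1
    if [ "$dir_mode" != "700" ]; then
        echo "unexpected mode $dir_mode on $dir"
        return 1
    fi
    if [ "$file_mode" != "600" ]; then
        echo "unexpected mode $file_mode on $dir/authorized_keys"
        return 1
    fi
    echo "ok"
}
```

Run as the git user, `check_ssh_perms /home/git/.ssh` prints `ok` when both modes match the ones set above.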

We are now ready to create a key pair consisting of a private and a public key. You can do this on your normal PC or directly on the Pi.

```
ssh-keygen -t rsa    # create a key pair and provide a strong passphrase for the private key
```

Note that you can provide a file name during the procedure. The public key file ends with the suffix .pub.

```
# Folder ~/Passwordstore
$ ll
total 32
drwxr-xr-x  2 stefan stefan 4096 Mar 13 22:40 .
drwxr-xr-x 10 stefan stefan 4096 Mar 13 22:38 ..
-rw-------  1 stefan stefan 1766 Mar 13 22:40 pi_git_rsa
-rw-r--r--  1 stefan stefan  402 Mar 13 22:40 pi_git_rsa.pub
```
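The file name can also be passed on the command line instead of typing it at the prompt. A minimal sketch, assuming OpenSSH's `ssh-keygen` is installed; the wrapper name `make_keypair` and the 4096 bit key size are choices made for this example, not requirements of the setup:

```shell
#!/bin/sh
# make_keypair FILE [extra ssh-keygen options]
# Creates an RSA key pair: the private key at FILE, the public key at FILE.pub.
# Hypothetical wrapper for illustration; ssh-keygen prompts for the passphrase
# unless one is supplied through the extra options.
make_keypair() {
    keyfile=$1
    shift
    ssh-keygen -t rsa -b 4096 -f "$keyfile" "$@"
}
```

For example, `make_keypair ~/Passwordstore/pi_git_rsa` creates the two files shown in the listing above and asks for the passphrase interactively.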

If you created the key files on a different PC than the Pi, you need to upload the public key to the Pi. We can do this with the following command:

```
cat ~/Passwordstore/pi_git_rsa.pub | ssh git@192.168.0.100 "cat >> ~/.ssh/authorized_keys"
```

If you generated the keys directly on the Pi, it is sufficient to cat the key into the file directly. After this step, verify that the key has been copied correctly. If the file looks similar to the following example, it worked.

```
git@zeropi:~/.ssh $ cat authorized_keys
ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQDZ7MZYvI……..wnQqchM1 stefan@pc
```
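Running the upload command twice appends the key twice. As a sketch of how to avoid that, the following helper (the name `add_key_once` is hypothetical) appends a public key to an authorized keys file only if the exact line is not already present. It is meant to be run on the Pi as the git user.

```shell
#!/bin/sh
# add_key_once PUBKEY_FILE AUTH_FILE: append the key only if it is not listed yet.
# Hypothetical helper for illustration, not part of OpenSSH.
add_key_once() {
    pubkey_file=$1
    auth_file=$2
    key=$(cat "$pubkey_file") || return 1
    # -x matches whole lines, -F treats the key as a fixed string, -q suppresses output.
    if grep -qxF -- "$key" "$auth_file" 2>/dev/null; then
        echo "key already present"
    else
        printf '%s\n' "$key" >> "$auth_file"
        echo "key added"
    fi
}
```

A call such as `add_key_once pi_git_rsa.pub ~/.ssh/authorized_keys` can then be repeated safely.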

We can then test key-based SSH authentication with the following command.

```
ssh -i pi_git_rsa git@192.168.0.100    # connect using the private key
```

You are then prompted for the passphrase of the private key that you specified earlier. Note that this passphrase differs from the password we created for the git user. A less secure but more convenient solution is to leave the passphrase empty during key pair creation. If no passphrase has been set, anyone who gets hold of the private key can connect to the Pi.

To increase convenience, you can add a shortcut for this connection by editing the /home/user/.ssh/config file. Simply add the following entry for the password store SSH connection.

```
Host passwords
    hostname 192.168.0.100
    port 22
    user git
    IdentityFile /home/stefan/Passwordstore/pi_git_rsa
```

Now you can connect to the Pi by typing `ssh passwords`. Note that you now need to provide the passphrase of the key file instead of the user password. Once you are logged in as the new user with sudo rights, delete the pre-installed user pi from the system:

```
sudo userdel pi
```

The default Raspbian partition configuration only utilises 2 GB of your SD card, which can quickly become insufficient. A convenient tool allows you to expand the root partition to the full size of your SD card. Simply run the following command and select the appropriate menu item.

`sudo raspi-config`

## Prepare the Git Repository

In the following, we create an empty git repository which we will use for versioning the password database from KeePass.

```
git@zeropi:~ $ mkdir Password-Repository
git@zeropi:~ $ cd Password-Repository/
git@zeropi:~/Password-Repository $ git init --bare
Initialized empty Git repository in /home/git/Password-Repository/
```

The repository on the Pi is now ready for ingesting the passwords.

## Check Out the New Repository on Your PC and Add the Password File

Now that the repository is initialized, we can start versioning the password file with git. Clone the repository, copy the password file into the cloned repository directory and add it to git.

```
cd ~
git clone passwords:/home/git/Password-Repository
cp /home/user/oldLocation/Password-Database.kdb ~/Password-Repository
cd ~/Password-Repository
git add Password-Database.kdb
git commit -m "initial commit"
```

The last step is to push the newly committed password file to the remote repository. You can improve the security by not adding the key file for KeePass into the repository.

```
git push origin master
```

The basic setup is now completed and you can clone this repository on any device, in order to have the latest password file available.

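## Check Out the Password Repository on Your Phone

A variety of Git clients exist for Android that can deal with identity files and private key authentication. I have had good experiences with Pocket Git. Clone the repository using a URL of the following form, where the host name and the port are example values that you replace with your own Dynamic DNS name and the external port configured on your router:

```
ssh://git@pi.duckdns.org:1234/home/git/Password-Repository
```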

### Versioning the Password File: Pull, Commit and Push

Handling versions of the password file follows the standard git procedure. The only difference is that, in contrast to the source code files for which git is usually used, the encrypted password database does not allow for diffs, so you cannot inspect the differences between two versions of the password database. For this reason, you need to make sure that you have the latest version of the password database before you edit the file. Otherwise you need to merge the changes manually. To avoid this, follow these steps from within the repository every time you plan additions, edits or deletions in the password database.

1. `git pull`

2. Make your changes in KeePass and save the database

3. `git commit -am "describe your changes"`

4. `git push`
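The four steps can be wrapped in two small shell functions so that the pull is never forgotten. This is a sketch, not part of git: the function names `pw_begin` and `pw_finish` and the default file name are assumptions made for this example. `git pull --ff-only` is used so that the command stops instead of attempting to merge the binary database when the local and remote histories have diverged.

```shell
#!/bin/sh
# Hypothetical helpers wrapping the pull / edit / commit / push cycle.
# Run both from inside the cloned repository.

# pw_begin: fetch the latest database; abort rather than merge if histories diverged.
pw_begin() {
    git pull --ff-only
}

# pw_finish MESSAGE [FILE]: commit the edited database and push it.
pw_finish() {
    message=$1
    file=${2:-Password-Database.kdb}
    git add -- "$file" &&
        git commit -m "$message" &&
        git push
}
```

A typical session is `pw_begin`, then editing the database in KeePass, then `pw_finish "added mail account"`.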

## Enabling Remote Access

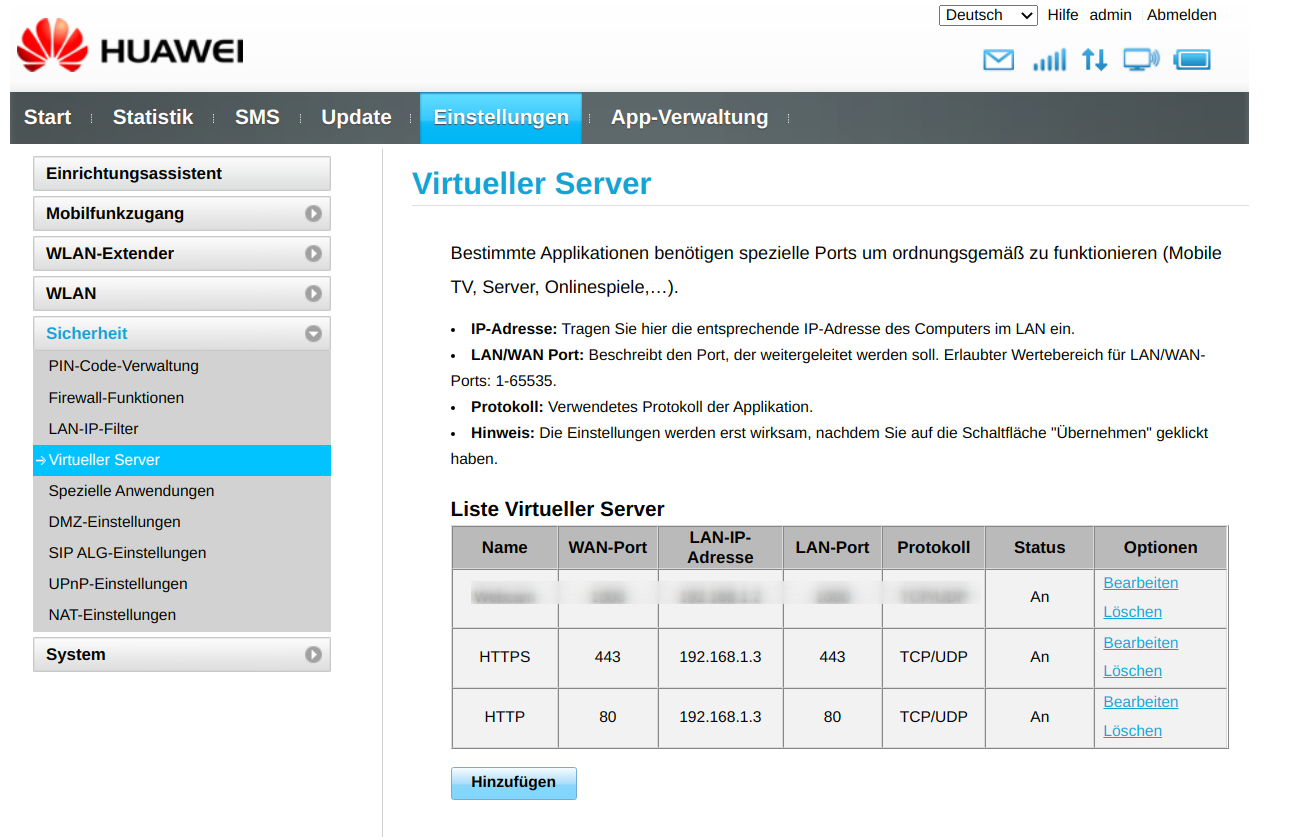

You can already access the Git repository locally in your own network. But in order to retrieve, edit and store passwords from anywhere, you need to enable port forwarding and Dynamic DNS. Port forwarding is pretty easy. Enter your router’s Web interface, browse to the port forwarding options and specify an external and internal port which points to the IP of the Raspberry Pi.

* IP address: 192.168.0.100

* Internal port: 22

* External port: 22100

* Protocol: TCP (SSH does not need UDP)

Now the SSH service and therefore the Git repository becomes available via the external port 22100. As we left the internal port at the default, no changes for the SSH service are required.

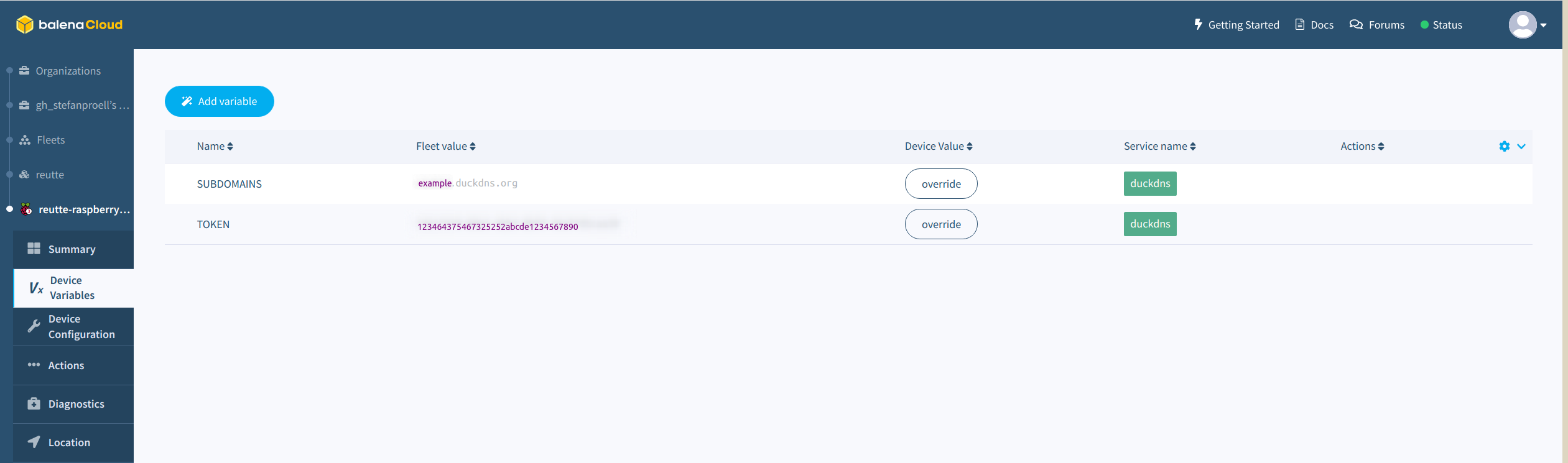

For Dynamic DNS I regularly use [Duck DNS](http://www.duckdns.org), a free service for resolving dynamic IP addresses to a static host name. After registering for the service, you can choose a host name and download the installer. There is an installer specifically for the [Raspberry Pi](https://www.duckdns.org/install.jsp). Follow these instructions and replace the token and the domain name in the file to match your account. You can now use the domain you registered to access the service from machines outside your network.

## Security Improvements

The setup so far is reasonably secure, as only users who have the key file and its passphrase can authenticate as the Git repository user. In general, it is good practice to disallow root logins via SSH and to restrict remote access. Ensure that all other users on the system can connect via SSH only if they use public key authentication. Always protect the key file with a passphrase, so that anyone who gets hold of your keys still requires the passphrase.

You can also explicitly disable password login for the user git and allow passwords only for users on the local network. Add these lines at the end of the sshd configuration file (/etc/ssh/sshd_config), as a Match block applies to everything that follows it, and restart the SSH service afterwards. This assumes that the global PasswordAuthentication option is set to no, so that connections from outside the local network are restricted to keys.

```
Match User git
    PasswordAuthentication no

Match Address 192.168.0.0/24
    PasswordAuthentication yes
```

If you know in advance the IP addresses from which you will update the password file, consider limiting access to these addresses only. The git user can authenticate with the key, but may still have too many privileges and could execute potentially harmful commands. Ensure that the git user is not in the list of superusers:

```
grep -Po '^sudo.+:\K.*$' /etc/group
```

The user git should not appear in the output. To limit the commands that the git user may execute, we can restrict what the key is allowed to do via SSH and use a specialised shell that only permits git commands. Prepend the following options to the public key of the git user in the authorized_keys file:

```
no-port-forwarding,no-agent-forwarding ssh-rsa AAAAB ........
```

In addition, we can change the default shell for the user git. Switch to a different user account with sudo privileges and issue the following command:

```
sudo usermod -s /usr/bin/git-shell git
```

This special shell is called git-shell and comes with the git installation automatically. It only permits git-specific commands, such as push and pull, which is sufficient for our purpose. If you now connect to the Pi with the standard SSH command, the connection is closed right after the login:

```
stefan $ ssh passwords
Enter passphrase for key '/home/passwort/pi_git_rsa':

The programs included with the Debian GNU/Linux system are free software;
the exact distribution terms for each program are described in the
individual files in /usr/share/doc/*/copyright.

Debian GNU/Linux comes with ABSOLUTELY NO WARRANTY, to the extent
permitted by applicable law.
Last login: Sun Mar 20 20:48:04 2016 from 192.168.0.13
fatal: Interactive git shell is not enabled.
hint: ~/git-shell-commands should exist and have read and execute access.
Connection to 192.168.0.100 closed.
```
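Both hardening steps, the missing sudo membership and the restricted login shell, can be re-checked at any time by reading `/etc/group` and `/etc/passwd`. The helpers below are a sketch under that assumption; the names `in_group` and `login_shell` are made up for this example, and the optional file arguments exist only so that the functions can be tried against copies of the files.

```shell
#!/bin/sh
# Hypothetical helpers for illustration.

# in_group USER GROUP [FILE]: succeed if USER is listed as a member of GROUP.
# Only the member list (field 4) is inspected, not a user's primary group.
in_group() {
    members=$(awk -F: -v g="$2" '$1 == g { print $4 }' "${3:-/etc/group}")
    case ",$members," in
        *",$1,"*) return 0 ;;
        *) return 1 ;;
    esac
}

# login_shell USER [FILE]: print the login shell recorded for USER (field 7).
login_shell() {
    awk -F: -v u="$1" '$1 == u { print $7 }' "${2:-/etc/passwd}"
}
```

On the Pi, `login_shell git` should print `/usr/bin/git-shell`, and `in_group git sudo` should fail.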

## Firewall

The Uncomplicated Firewall (ufw) is much less complex to set up than classic iptables and provides exactly what the name implies: a simple firewall. You can install and initialize it as follows:

```
sudo apt-get install ufw          # install it
sudo ufw default deny incoming    # deny all incoming traffic
sudo ufw allow ssh                # only allow incoming SSH
sudo ufw allow out 80             # allow outgoing port 80 for the Duck DNS request
sudo ufw enable                   # switch it on
sudo ufw status verbose           # verify the status
```

The great tutorials at [Digital Ocean](https://www.digitalocean.com/community/tutorials/how-to-setup-a-firewall-with-ufw-on-an-ubuntu-and-debian-cloud-server) provide more details.

## Conclusion

In this little tutorial, we installed a Git server on a Raspberry Pi Zero (or any other Linux machine) and created a dedicated user for connecting to the service. The user requires a private key to access the service, and the SSH server only permits key-based logins unless the connection originates from the local network. The git user may only use a restricted shell and cannot log in interactively. The password file is encrypted and all versions of the passwords are stored within the git repository.


[1]: https://git-scm.com/book/en/v2/Git-on-the-Server-Setting-Up-the-Server